Demystifying algorithms in digital products

Core opportunities, ways of working and strategic north stars

Algorithms, more specifically machine learning algorithms, play a pivotal role in many online businesses including Netflix, Amazon, and even direct-to-consumer (DTC or D2C) brands such as HelloFresh and Daily Harvest that sell directly to their customers instead of going through wholesalers or retailers.

These companies have access to data that enable them to deliver outstanding, personalized customer experiences that support effortlessly finding products you’ll love. The core opportunities for algorithms that leverage those data can be roughly categorized into algorithmic components, algorithmic construction, and algorithmic content optimization - we’ll explore what they are, why we need them and how they differentiate the business and make customers happy.

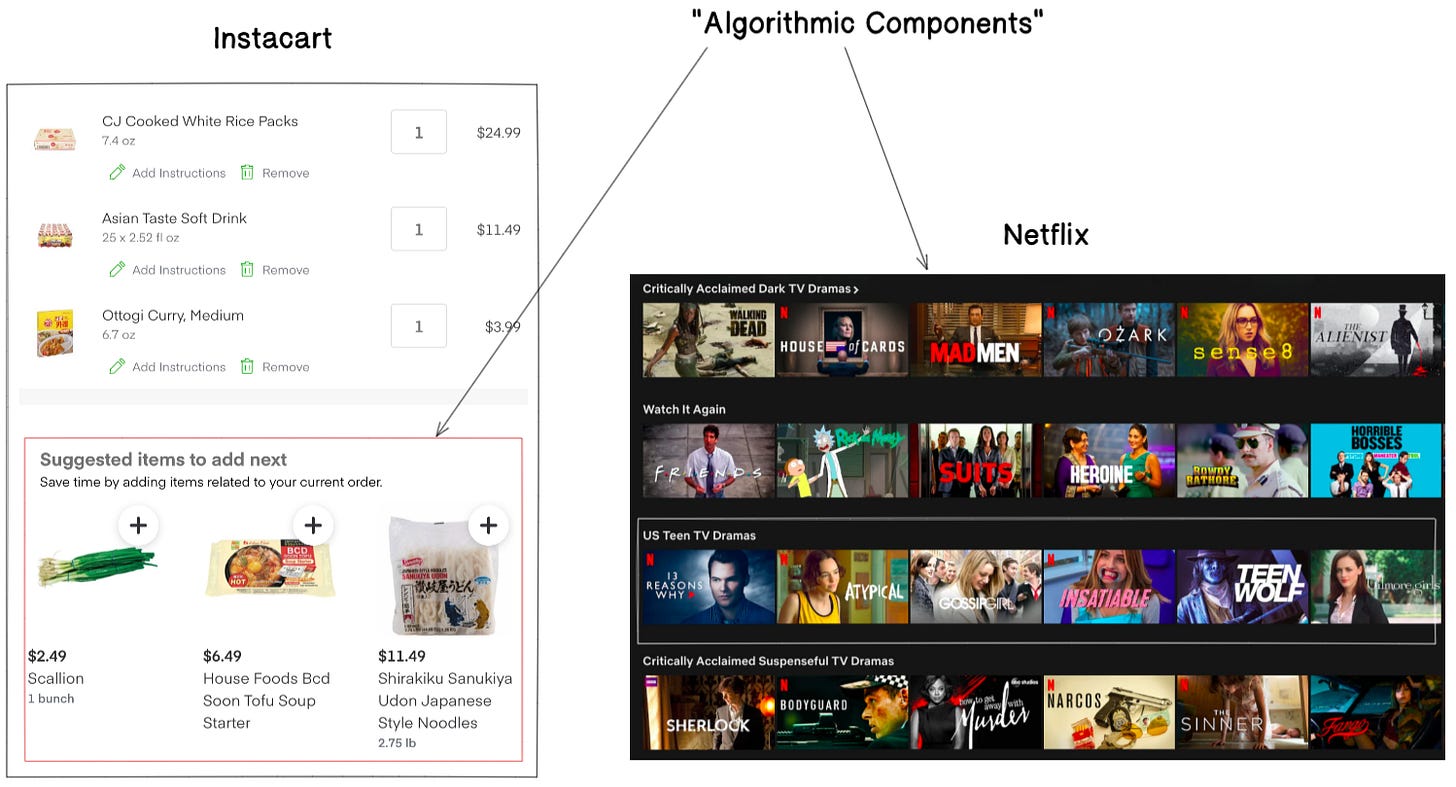

Algorithmic Components

Algorithmic components are embedded in digital products to introduce personalized content and experiences. For example, Instacart has algorithmic upsell and cross-sell within cart that recommends products related to your current order to help you build your cart quicker. At Netflix, everything is a recommendation. The entire page is organized as a grid of recommended contents. Each carousel is an algorithmic component.

What underlies these algorithmic components is the theme that every customer is an individual - the optimal contents of an algorithmic component depends on context and changes in time. Algorithms allow us to continuously optimize the experience in an ongoing, efficient and scalable way that is unique for every customer.

Algorithmic components are evaluated and optimized by their impact on the customer and the business and their direct impact on KPIs (revenue, consumption, etc.) through continuous experimentation.

It’s worthwhile noting that not everything is an algorithmic component! Page flow, content and editorial components exist alongside algorithmic components. Some components may just be for marketing purposes.

Algorithmic components can play specific roles in digital experiences - having a theme or optimizing for a desired behavior e.g. new user acquisition or new product trial. Creation of new algorithmic components should be a partnership (even the core of the partnership) among data science, merchandising, product and engineering as we design new experiences.

Most importantly, algorithms need to learn from and iterate based on customer interaction and outcomes. Customers need to be able to interact with algorithmic components without intermediation e.g. downstream filtering and tweaking by another team that makes adjustments to what products show up and the order they appear in. Otherwise the feedback loop starts to break, and it will be difficult for algorithms to consistently and correctly learn from customer interaction and outcomes.

For example, if the algorithm developer makes a change to the underlying algorithm of a module or component in the experience, but another team (unknowingly) tinkers with a business rule that overwrites the outcome of the change shown to the end users, how will the algorithm developer correctly measure and consistently attribute the impact of the specific change it has made to the algorithm? This kind of division of ownership creates a significant overhead that makes it difficult to evaluate the performance of an algorithmic component and improve it over time.

This has a huge implication for the algorithm developer:

To be responsible for the performance of an algorithmic component, the algorithm developer needs to own it all the way to the customer

Feedback or suggestions from other teams are important and welcomed, but the algorithm developer, either a data scientist or a machine learning engineer, needs to own the algorithms all the way to the customer. What works best is empirical, not editorial decision, and should be evaluated algorithmically rather than manually.

Ways of working rule of thumb:

Align on the KPI and let the algorithms do the rest (and hold them accountable)

Ultimately, data science changes the way we operate and the way we engage with our customers. While merchandising, product and engineering teams are key partners in designing new experiences and finding new ways to leverage algorithms, they should not be dictating how algorithms should be used and how they are improved. Instead, we should align on business problem and the KPI that the algorithms will be optimizing in an algorithmic component and hold them accountable.

Algorithmic Construction

Algorithms can be used to construct digital experiences as well. For example, what’s the optimal component to show a customer after they were happy with their product? Disappointed by a product? Disappointed by two products consecutively?

At Netflix and Spotify, for example, algorithms are used both to decide what’s in a row and what rows to show. A unique permutation of rows (shelves) and contents (tiles) in a row based on everything we know about the customer (e.g. past consumption history, engagement with contents, time of the day, device) creates a personalized home page that can scale to millions of customers.

In contrast, merchandising would try to come up with a set of seemingly intricate business rules defining the criteria for what, when and how to show to a subset of users. These business rules could range from “show new movies in the top carousel to users that visited the home page at least once in the last 30 days” to “when a user gives a thumbs up to The Office, recommend Space Force.“ You could imagine how creating and managing hundreds and thousands of these rules can quickly get out of hand. Manual exploration of what works and doesn’t for each user or even a subset of users by adding and updating business rules is just too costly to even invest engineering and data science time and efforts considering the scale of the users and the contents and the rate at which these contents are consumed by the users.

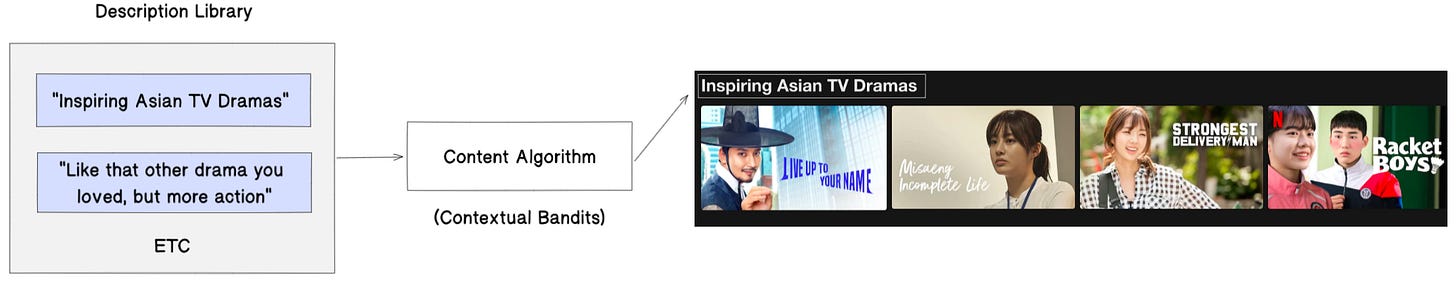

Algorithmic Content Optimization

An important special case of an algorithmic component is optimizing over a library of (potentially personalized) content. For example, for a product, what image and copy should we use to represent it? There is no need to make only one choice or to pick rules using a sequence of tests because the optimal image and description may differ by customer and over time. There will seldom be a right answer that always remains right - we should continuously optimize and explore over a library of options to cater to diverse tastes and preferences and ever-changing customer needs and wants.

Netflix uses algorithms (contextual bandits) to choose the box-art to display and even to generate it:

“[G]iven the enormous diversity in taste and preferences, wouldn’t it be better if we could find the best artwork for each of our members to highlight the aspects of a title that are specifically relevant to them?”

Managing an ever-growing tree of business rules manually (a.k.a. rules engine) to decide what image and copy to show to a subset of users for every content is extremely inefficient and costly and lends itself to a highly unstable decision making that is subject to a lot of noise. Algorithms are better at understanding latent tastes and preferences of customers and characteristics of contents and capturing complex interactions among them that manual tinkering of business rules simply cannot.

Strategic and Ways of Working North Stars

So what do all these mean for how we should evaluate and optimize algorithmic products? How should teams collaborate with data science?

Digital experiences should be evaluated empirically

Teams should align on business problem instead of solutions where most alignment should be on KPIs. Experimentation enables low-stakes, low-alignment exploration of different solutions.

Iteration and continuous optimization

The path to great consumer (and algorithmic) products is (continuous) experimentation and iteration. We should be biased towards testing-and-learning over upfront design and optimization.1

While running a sequence of tests helps us choose a generic, one-size-fits-all (sometimes misleading) outcome that applies to everyone, a contextual bandit engine, which is an extension of a multi-armed bandit framework, delivers the optimal variation for each individual that is continuously updated over time.

Build components and interfaces to enable autonomous iteration

Aim to be highly aligned, loosely coupled - highly aligned on what we are optimizing and loosely coupled on how we do it. For example, algorithm developers should be able to independently improve algorithmic components without close coordination with other teams.

More specifically, we should invest in interfaces (usually APIs) that enable decoupled iteration across teams where engineering and product can iterate on experiences and implementations and data science can iterate on algorithms. We should create leverage through re-usability by avoiding creating many bespoke integrations between data systems and engineering systems.

Algorithm developers should be responsible for the impact of algorithmic components, construction and optimization of content

They should be accountable for business impact and KPIs (read: sign up for revenue), and to be responsible, algorithm developers need to own the algorithm and its development all the way to the customer.

Outro

Sometimes there will be tension between data science and other teams when it comes to developing algorithmic products, but it’s a healthy tension. The goal is to equip the algorithms to do things that a human couldn’t do by themselves (e.g. personalization and recommendations at scale), not just to automate something a human could do. Occasionally we encounter very interesting cases where algorithms and humans disagree which presents the challenge of earning trust and credibility. But over time, it’s great when we can demonstrate that algorithms can create really good outcomes. Especially when empowering people in a company you can make that case empirically through experimentation and using data to show that this actually makes customers happier.

Ending Note

A huge thanks to Rachel Moon on Data Science Doodles for taking the time to review this post and suggest improvements.

Note that I’m using the term data science very broadly to include data analysis, statistics, machine learning and AI.